TL;DR

Encouraged by a friend, we’ll share bits of our experience during the Olympic Games Rio 2016. Before starting, I would like to clarify that Globo.com only had the rights to stream the content in Brazil.

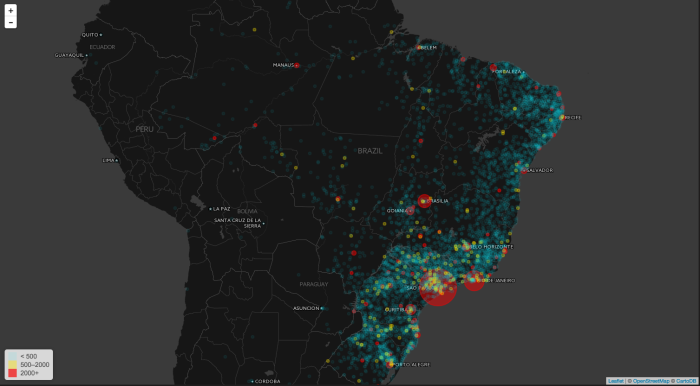

We used around 5.5 TB of memory and 1,056 CPUs across two PoPs located in the southeast of the country.

Not so long; I’ll read it

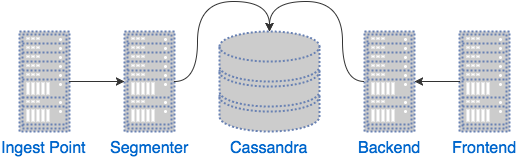

The live streaming infrastructure for the Olympics was an iteration on the architecture we had used for the 2014 FIFA World Cup.

The ingest point receives an RTMP input using nginx-rtmp and then forwards the RTMP to the segmenter. This extra layer provides mostly scheduling, resource sharing and security.

The segmenter uses EvoStream to generate HLS in a known folder, which is watched by a Python daemon. This daemon then sends the video data and metadata to a Cassandra cluster, which is used mostly as a queue.
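A minimal sketch of such a watch-and-forward loop, assuming simple directory polling and `.ts` segment files. The names `scan_new_segments`, `watch` and the `publish` callback are hypothetical; the real daemon and its Cassandra writes are not shown in this post, so `publish` only stands in for whatever stores the chunk.

```python
import os
import time
from typing import Callable, List, Optional, Set


def scan_new_segments(folder: str, seen: Set[str], suffix: str = ".ts") -> List[str]:
    """Return paths of segment files not seen before, oldest first."""
    fresh = []
    with os.scandir(folder) as entries:
        for entry in entries:
            if entry.is_file() and entry.name.endswith(suffix) and entry.name not in seen:
                fresh.append((entry.stat().st_mtime, entry.name))
    fresh.sort()  # by modification time, then by name
    names = [name for _, name in fresh]
    seen.update(names)
    return [os.path.join(folder, name) for name in names]


def watch(folder: str,
          publish: Callable[[str, bytes], None],
          interval: float = 1.0,
          max_polls: Optional[int] = None) -> None:
    """Poll `folder` and hand every new segment to `publish` (hypothetical sink)."""
    seen: Set[str] = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for path in scan_new_segments(folder, seen):
            with open(path, "rb") as f:
                publish(os.path.basename(path), f.read())
        polls += 1
        time.sleep(interval)
```

A production watcher would also need to make sure a segment is fully written before reading it, for example by reacting only to close or rename events.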

Now let’s move to the user’s point of view. When the player wants to play a video, it needs to get a video chunk, so it requests a file from our front-end, which uses nginx to provide caching, security and load balancing.

Network tip:

Modern network cards offer multiple queues: pin each queue, along with its XPS and RPS settings, to a specific CPU.
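On Linux, RPS and XPS are configured by writing a hexadecimal CPU bitmask to `rps_cpus` and `xps_cpus` under `/sys/class/net/<device>/queues/`. The sketch below only computes a one-queue-per-CPU plan and does not write anything; the device name `eth0`, the queue count and the helper names are assumptions for illustration. IRQ affinity (`/proc/irq/<n>/smp_affinity`) uses the same mask format, but the IRQ numbers are machine-specific and are left out.

```python
from typing import List, Tuple


def cpu_mask(cpu: int) -> str:
    """Hex bitmask with only bit `cpu` set, in comma-separated 32-bit groups."""
    value = 1 << cpu
    groups = []
    while True:
        groups.append(f"{value & 0xFFFFFFFF:08x}")
        value >>= 32
        if value == 0:
            break
    return ",".join(reversed(groups))


def pinning_plan(device: str, queues: int, cpus: int) -> List[Tuple[str, str]]:
    """Return (sysfs path, mask) pairs mapping queue q to CPU q % cpus."""
    base = f"/sys/class/net/{device}/queues"
    plan = []
    for q in range(queues):
        mask = cpu_mask(q % cpus)
        plan.append((f"{base}/rx-{q}/rps_cpus", mask))
        plan.append((f"{base}/tx-{q}/xps_cpus", mask))
    return plan


if __name__ == "__main__":
    # Print the plan instead of applying it; applying requires root.
    for path, mask in pinning_plan("eth0", queues=4, cpus=4):
        print(f"echo {mask} > {path}")
```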

When this front-end does not have the requested chunk, it goes to the backend, which uses nginx with Lua to generate the playlist and serve the video chunks from Cassandra.
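To give an idea of what generating the playlist involves, here is a minimal sketch of building a live HLS media playlist from the most recent chunks. It is written in Python rather than Lua, and the function name, the `(sequence, duration, uri)` tuple layout and the window size are assumptions, not the backend's actual code or schema.

```python
import math
from typing import List, Tuple

Segment = Tuple[int, float, str]  # (media sequence number, duration in seconds, URI)


def live_playlist(segments: List[Segment], window: int = 3) -> str:
    """Build a sliding-window HLS media playlist from segments ordered oldest first."""
    recent = segments[-window:]
    if not recent:
        raise ValueError("at least one segment is required")
    # The target duration must be an integer no smaller than any segment duration.
    target = math.ceil(max(duration for _, duration, _ in recent))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{recent[0][0]}",
    ]
    for _, duration, uri in recent:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    # No #EXT-X-ENDLIST tag: the stream is live, so players keep reloading.
    return "\n".join(lines) + "\n"
```

In this sketch the chunk list would come from a query for the latest rows of a given stream; older chunks simply fall out of the window.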

Caching tip:

Use RAM to cache: a dual-layer caching solution that keeps the hot (most recent) content on tmpfs and the colder (older) content on disk might decrease CPU load, disk IOPS and response time.
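The idea can be modelled with two directories, one on tmpfs and one on disk. This is only a toy sketch in Python; the actual caching was done with nginx, and the class name, the capacity counted in files and the demotion policy are assumptions made for illustration.

```python
import os
import shutil
from typing import List, Optional, Tuple


class TwoTierCache:
    """Keep the newest files in a hot directory and demote older ones to a cold one."""

    def __init__(self, hot_dir: str, cold_dir: str, hot_capacity: int) -> None:
        self.hot_dir = hot_dir            # e.g. a tmpfs mount (assumption)
        self.cold_dir = cold_dir          # e.g. a directory on disk (assumption)
        self.hot_capacity = hot_capacity  # maximum number of files kept hot
        self.hot_order: List[str] = []    # names in the hot tier, oldest first

    def put(self, name: str, data: bytes) -> None:
        with open(os.path.join(self.hot_dir, name), "wb") as f:
            f.write(data)
        if name in self.hot_order:
            self.hot_order.remove(name)
        self.hot_order.append(name)
        # Demote the oldest entries once the hot tier is over capacity.
        while len(self.hot_order) > self.hot_capacity:
            oldest = self.hot_order.pop(0)
            shutil.move(os.path.join(self.hot_dir, oldest),
                        os.path.join(self.cold_dir, oldest))

    def get(self, name: str) -> Optional[Tuple[str, bytes]]:
        """Return (tier, data), checking the hot tier first, or None on a miss."""
        for tier, directory in (("hot", self.hot_dir), ("cold", self.cold_dir)):
            path = os.path.join(directory, name)
            if os.path.exists(path):
                with open(path, "rb") as f:
                    return tier, f.read()
        return None
```

For live video the newest chunks receive almost all of the requests, which is why serving them from RAM takes most of the load off the disk.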

You can find a more detailed view of our nginx usage in a two-part article posted on nginx.com (caching and micro-services) and in a summary from Juarez Bochi.

This is just a macro view. We also had to provide and scale many microservices to offer things like live thumbnails, an electronic program guide, better usage of the ISP bandwidth, geofencing and others. We deployed them either on bare metal or on tsuru.

In the near future we might investigate other adaptive streaming formats like DASH, explore other kinds of input (not only RTMP), increase the number of bitrates, make better use of our farm and distribute the content closer to the end user.

Thanks @paulasmuth for pointing out some errors.

None of these numbers seem to add up?

If 30 million hours were watched with an average bandwidth of 2Mbps, the total data volume should be 25,749TB (or close to 26PB) and not 400TB.

Also, if the peak bandwidth was 600Gbps, that peak could not have lasted very long. With that much bandwidth the claimed 400TB of total transferred data would be used up in roughly 90 minutes.

So either the total data transferred or the number of hours watched figure is off by a factor of at least 60x.

[ I figured “400Tb” was supposed to mean 400 terabytes. If it’s actually 400 terabits, the numbers are off by one more order of magnitude ]
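The arithmetic in this comment can be reproduced with a few lines. The sketch assumes binary units for the first figure (2 Mbps as 2 × 1024 × 1024 bits per second, TB as 2^40 bytes), which is the reading that yields 25,749, and decimal units for the peak estimate; the function names are only illustrative.

```python
def volume_tib(hours_watched: float, avg_bits_per_second: float) -> float:
    """Total data volume in TiB for a given watch time and average bitrate."""
    total_bytes = hours_watched * 3600 * avg_bits_per_second / 8
    return total_bytes / 2**40


def minutes_to_transfer(total_bytes: float, bits_per_second: float) -> float:
    """Minutes needed to move `total_bytes` at a constant bitrate."""
    return total_bytes * 8 / bits_per_second / 60


# 30 million hours at 2 Mbps (binary megabits)
expected = volume_tib(30_000_000, 2 * 1024 * 1024)
print(round(expected))        # 25749

# 400 TB (decimal) at a sustained 600 Gbps
print(round(minutes_to_transfer(400e12, 600e9)))  # 89

# Ratio between the expected volume and the claimed 400 TB
print(round(expected / 400))  # 64
```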

Thanks for the questions, Paul, but first let me clarify that all these numbers were gathered through SNMP or other means; none of them were made up.

About the number of hours: yeah, it’s weird. I really don’t remember whether we also counted VOD videos in the number of hours watched, so I’ll remove this number until I figure out the correct one. Also, for many of these averages we didn’t post the deviation, which is pretty high.

I misread the number of Tb (Grafana was showing 4xx.xxx and I wrongly read it as 4xx), so it’s actually more than 400,000.

About the peaks: they really don’t last long. I’ll leave you a graph that shows that.

Thank you very much for pointing these things out to us.
